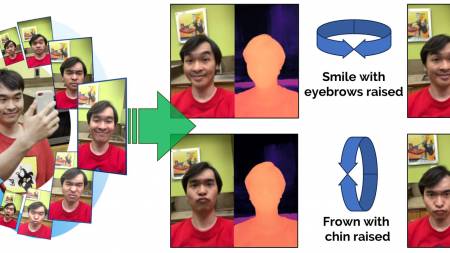

Dynamic Light Field Network (DyLiN) accommodates dynamic scene deformations, such as in avatar animation, where facial expressions can be used as controllable input attributes.

Researchers from Carnegie Mellon University and Fujitsu have developed a new method to convert 2D images to a 3D structure.

The work, led by Laszlo A. Jeni’s CUBE Lab in the School of Computer Science, represents a significant breakthrough in addressing a longstanding challenge in computer vision.

Harnessing artificial intelligence, the Dynamic Light Field Network (DyLiN) handles non-rigid deformations and topological changes, surpassing current static light field networks.

“Working with 2D images to create a 3D structure is a complex process that requires a deep understanding of how to handle deformations and changes. DyLiN is our answer to this problem, and it has already shown significant improvements over existing methods in terms of speed and visual fidelity,” said Jeni, a professor in the Robotics Institute.

DyLiN learns a deformation field that maps input rays to canonical rays and lifts them into a higher-dimensional space to handle discontinuities. The team also introduced CoDyLiN, which enhances DyLiN with controllable attribute inputs. Both models were trained via knowledge distillation from pretrained dynamic radiance fields.
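The description above is high level. As a rough illustration only, here is a toy NumPy sketch of the same data flow; it is not the authors' implementation. It assumes a ray is a 6D vector (3D origin plus unit direction), that time is a scalar input, and that three small, randomly initialized MLPs with hypothetical names and sizes (`deform_net`, `hyper_net`, `field_net`) stand in for the deformation field, the higher-dimensional lift, and the light field itself. `teacher_color` is a made-up placeholder for the colors a pretrained dynamic radiance field would render.

```python
import numpy as np

RAY_DIM = 6    # assumption: a ray is a 3D origin plus a 3D unit direction
HYPER_DIM = 2  # assumption: number of extra "lifted" coordinates


def mlp_init(sizes, rng):
    """Randomly initialized fully connected layers (illustrative sizes only)."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def mlp_apply(params, x):
    """ReLU MLP whose final layer is linear."""
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


rng = np.random.default_rng(0)
# Hypothetical sub-networks standing in for DyLiN's components.
deform_net = mlp_init([RAY_DIM + 1, 64, RAY_DIM], rng)    # (ray, t) -> ray offset
hyper_net = mlp_init([RAY_DIM + 1, 64, HYPER_DIM], rng)   # (ray, t) -> lifted coords
field_net = mlp_init([RAY_DIM + HYPER_DIM, 128, 3], rng)  # lifted ray -> RGB


def random_rays(n, rng):
    """Sample n rays with random origins and unit-length directions."""
    origins = rng.normal(size=(n, 3))
    dirs = rng.normal(size=(n, 3))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    return np.concatenate([origins, dirs], axis=1)


def student_color(rays, t):
    """Deform each ray to a canonical ray, lift it, and predict one RGB color."""
    x = np.concatenate([rays, t[:, None]], axis=1)
    canonical = rays + mlp_apply(deform_net, x)  # deformation field (toy version)
    lifted = np.concatenate([canonical, mlp_apply(hyper_net, x)], axis=1)
    return 1.0 / (1.0 + np.exp(-mlp_apply(field_net, lifted)))  # sigmoid to [0, 1]


def teacher_color(rays, t):
    """Made-up stand-in for colors rendered by a pretrained dynamic radiance field."""
    return 0.5 + 0.5 * np.sin(rays[:, 3:] + t[:, None])


def distillation_loss(rays, t):
    """Mean squared error between student and teacher colors on the same rays."""
    return float(np.mean((student_color(rays, t) - teacher_color(rays, t)) ** 2))


rays = random_rays(1024, rng)
t = rng.uniform(0.0, 1.0, size=1024)
print(student_color(rays, t).shape)  # (1024, 3)
print(distillation_loss(rays, t))
```

The student makes a single forward pass per ray and returns a color directly, whereas a radiance field typically integrates many samples along each ray; that difference is the general reason light field networks can render faster. A real distillation setup would minimize `distillation_loss` over many teacher-rendered rays with a gradient-based optimizer, which this sketch omits.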

During testing, DyLiN performed strongly on both synthetic and real-world datasets containing various non-rigid deformations, matching state-of-the-art methods in visual fidelity while being 25 to 71 times faster computationally. When tested on attribute-annotated data, CoDyLiN surpassed its teacher model.

“The strides made by DyLiN and CoDyLiN mark an exciting progression towards real-time volumetric rendering and animation,” Jeni said. “This improvement in speed without sacrificing fidelity opens up a world of opportunities in various applications.”

The research, supported by Fujitsu Research of America, represents a significant advance in 3D modeling and rendering. These methods could revolutionize industries that rely heavily on deformable 3D models, such as virtual simulation, augmented reality, gaming, and animation. Working from 2D images, DyLiN and CoDyLiN can accurately render and analyze changes in human movement within a virtual 3D space. They can also create a 3D avatar from real images, enabling the transfer of facial expressions from a real person to their virtual counterpart.

The research was conducted by Jeni, Heng Yu, Joel Julin and Zoltan A. Milacski of CMU’s Robotics Institute, along with Koichiro Niinuma of Fujitsu Research of America. The team presented its paper, “DyLiN: Making Light Field Networks Dynamic,” in June at the Conference on Computer Vision and Pattern Recognition (CVPR). More information is available on the project’s website.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu