Robots deployed in the home carry immense potential to improve people’s quality of life. Robots for cleaning, companionship, security, healthcare needs, and more can travel through home environments and assist with a multitude of tasks, making human-robot interaction an area of particular interest to many roboticists. Yet for all their convenience, in-home robots have a noise problem.

Robots, unlike humans, cannot detect how loud they are. They have no understanding of the impact their noise can have on the acoustic and physical environments in which they operate. While they can assist around the house, they can also interfere with daily life, such as when an occupant takes a video call, plays music, has an in-person conversation or has a sleeping child in the home.

To address the gap in acoustics and robotics research, a team at the Carnegie Mellon University Robotics Institute developed Audio Noise Awareness using Visuals of Indoor environments for NAVIgation (ANAVI), a framework for enabling home robots to estimate the noise levels in indoor environments using only visual information.

“When we are thinking about bringing robots into our personal environments, we have to consider not just how they are visually perceiving our environments, but also how humans around them will perceive the audio that they generate,” said Vidhi Jain, Ph.D. student and lead researcher. “They currently don’t have any understanding of that.”

Jain teamed up with fellow Ph.D. student Rishi Veerapaneni and her advisor, assistant professor Yonatan Bisk, to develop a model that lets a robot take visual scans of its environment and then estimate how loud its actions will be at the listener’s location. Since the way noise travels depends on the physical composition of rooms, the estimates are based on the architecture and on how much sound the different materials in the environment absorb.
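To make the role of the listener’s location concrete, here is a minimal sketch of the simplest possible estimate: free-field falloff from a point source, where the level drops by about 6 dB each time the distance doubles. This is not the ANAVI model, which learns from visual features; the function name, the 70 dB source level and the 1-meter reference distance are assumptions for illustration. It ignores walls and materials entirely, which is the gap a vision-based predictor is meant to fill.

```python
import math


def level_at_listener(source_db: float, distance_m: float,
                      ref_distance_m: float = 1.0) -> float:
    """Free-field sound level (dB) at distance_m from a point source.

    source_db is the level measured at ref_distance_m from the source.
    Illustrative only: no walls, reflections, or absorbing materials.
    """
    # Clamp so positions closer than the reference distance
    # never report a level above the source level.
    d = max(distance_m, ref_distance_m)
    return source_db - 20.0 * math.log10(d / ref_distance_m)


# Hypothetical robot measured at 70 dB from 1 m away
print(round(level_at_listener(70.0, 2.0), 1))   # 64.0
print(round(level_at_listener(70.0, 10.0), 1))  # 50.0
```

In a real home, a closed door or a carpeted hallway can change these numbers considerably, so a distance-only estimate like this one is best read as a rough starting point.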

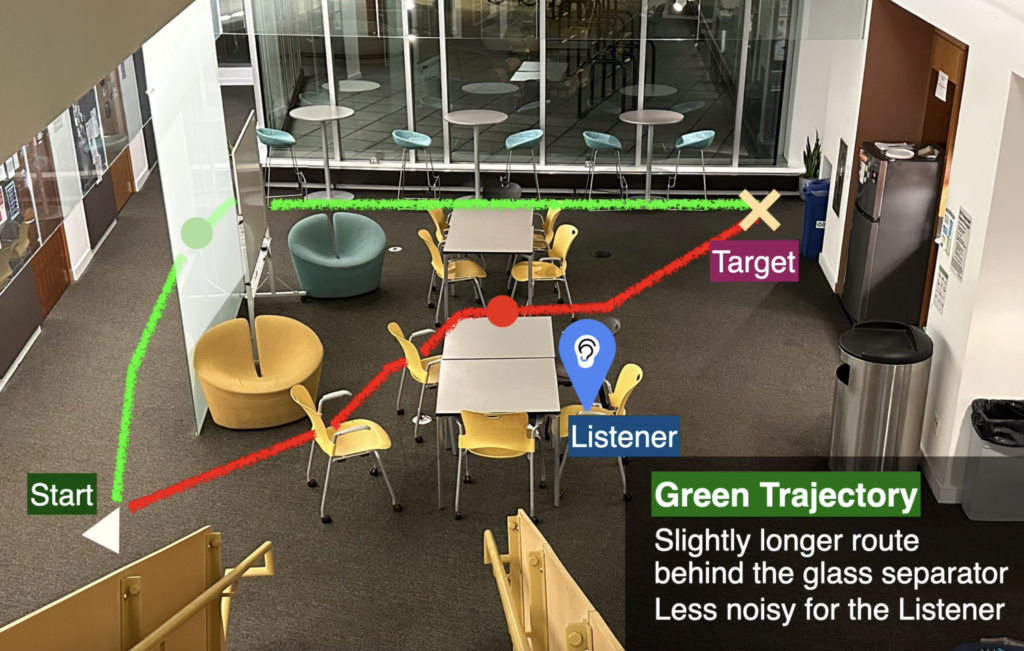

The green trajectory demonstrates the longer route a robot can take based on the listener’s location and potential noise levels.

The ANAVI framework uses an acoustic noise prediction (ANP) model to estimate audio noise levels. To gather data, the team conducted both simulated and real-world experiments that combine the ANP model with action acoustics, allowing wheeled (Hello Robot Stretch) and legged (Unitree Go2) robots to navigate in multiple environments while adhering to noise constraints. The initial results showed that the ANP model outperforms baseline models by incorporating visual features, which improve its ability to predict how architectural layout and physical materials in the environment affect sound intensity.
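The idea of navigating under a noise constraint can be sketched with a small grid planner. The example below is an illustration under stated assumptions, not the team’s planner: it runs Dijkstra’s algorithm on an empty grid and adds a penalty to every step whose predicted level at the listener exceeds a limit. The names `predicted_db` and `plan`, the 70 dB source level, the 60 dB limit and the 1-meter cell size are all hypothetical, and the simple distance-based predictor stands in for a learned noise model.

```python
import heapq
import math


def predicted_db(cell, listener, source_db=70.0, cell_size_m=1.0):
    """Stand-in noise predictor: free-field falloff from robot cell to listener."""
    d = max(math.dist(cell, listener) * cell_size_m, 1.0)
    return source_db - 20.0 * math.log10(d)


def plan(grid_size, start, goal, listener, limit_db=60.0, weight=1.0):
    """Dijkstra on a 4-connected, obstacle-free grid.

    Each step costs 1, plus weight times the number of dB by which the
    predicted level at the listener exceeds limit_db.
    """
    rows, cols = grid_size
    best = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            excess = max(0.0, predicted_db(nxt, listener) - limit_db)
            new_cost = cost + 1.0 + weight * excess
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(frontier, (new_cost, nxt))
    # Walk back from the goal to recover the route
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


# Listener sits in the middle of the straight line between start and goal
direct = plan((5, 7), (2, 0), (2, 6), listener=(2, 3), weight=0.0)
quiet = plan((5, 7), (2, 0), (2, 6), listener=(2, 3), weight=1.0)
print(len(direct), direct)
print(len(quiet), quiet)
```

With the penalty switched off, the planner returns the shortest route, which passes right by the listener. With it switched on, the route bends away and gets longer, the same trade-off the green trajectory in the figure illustrates.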

“We wanted to train a model using simulation tools that can take real world scans of your home. Then we also used audio simulation to calculate how much sound produced at a particular location will be heard at another location based on architecture,” said Jain, who presented ANAVI at the Conference on Robot Learning (CoRL) last November. “These are approximations, but they’re really good approximations for us to train our primitive model.”

The team’s goal is to inspire other researchers to eventually equip robots with the ability to make informed decisions about where they complete tasks based on who else is in the home, much as a person would in the same situation.

“If my partner was having a video call right now and I was cleaning the house, I would be mindful of whatever noise I create and how loud it will be,” said Jain. “I might go and clean in another room where I think that it won’t be that loud for the person.”

With robots becoming increasingly popular in home settings, ANAVI could help inspire future research and development on robot acoustics and on robots’ ability to interact with humans without disrupting them. By incorporating both visual and auditory data into robotic navigation systems, robots can integrate into human environments in a quieter, safer, and more considerate manner, speaking (softer) volumes to the future of human-robot interaction.

You can find the paper, code and helpful visuals for ANAVI on the project website.

For More Information: Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu