Robotics Institute researchers have developed a new method for autonomous aerial robot exploration and multirobot coordination inside abandoned buildings that could help first responders gather information and make better-informed decisions after a disaster.

An estimated 100 earthquakes worldwide cause damage each year. This damage includes collapsed buildings, downed electrical lines and more. For first responders, assessing the scene and deciding where to focus rescue efforts can be both critical and risky.

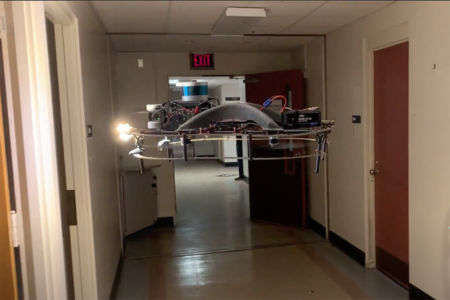

Researchers at Carnegie Mellon University’s Robotics Institute (RI) in the School of Computer Science have developed a new method for autonomous aerial robot exploration and multirobot coordination inside abandoned buildings that could help first responders gather information and make better-informed decisions after a disaster.

“A key idea of this research was avoiding redundancy in exploration,” said RI Ph.D. student Seungchan Kim. “Since this is multirobot exploration, coordination and communication among robots is vital. We designed this system so each robot explores different rooms, maximizing the rooms a set number of drones could explore.”

The drones focus on quickly detecting doors because meaningful targets, like people, are more likely to be found in rooms than in corridors. To find these entryways, the robots process the geometric properties of their surroundings using an onboard lidar sensor. Gently hovering about six feet above the floor, the aerial robots transform the 3D lidar point cloud data into a 2D transform map. This map represents the layout of the space as an image made up of cells, or pixels, which the robots then analyze for structural cues that signify doors and rooms. Walls appear as occupied pixels close to the drone, while an open door or passageway appears as empty pixels. The researchers modeled doors as saddle points, which allowed the robots to identify passageways and pass through them quickly. When a robot enters a room, the room is represented in the map as a circle.
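The article does not publish the team's algorithm, so the following is only a minimal Python sketch of the general idea, not the researchers' implementation. It assumes the "2D transform map" is a distance transform, in which each free cell stores its grid distance to the nearest wall. Under that assumption a doorway shows up as a saddle point (a minimum along the passage and a maximum across it), and a local maximum marks the center of the largest circle that fits inside a room. The function names, the 1.8 m (about six feet) flight altitude, the ±0.3 m height band and the 1 m cell size are all hypothetical, and only axis-aligned doors are detected.

```python
import math
from collections import deque

# Hypothetical parameters (metres): ~six-foot flight altitude, height band, cell size.
FLIGHT_ALTITUDE = 1.8
HEIGHT_BAND = 0.3
RESOLUTION = 1.0


def points_to_grid(points, width, height, resolution=RESOLUTION,
                   altitude=FLIGHT_ALTITUDE, band=HEIGHT_BAND):
    """Flatten (x, y, z) lidar points into a 2D occupancy grid (True = occupied).

    Only returns near flight altitude are kept, so floor and ceiling hits
    do not mark cells as walls.
    """
    grid = [[False] * width for _ in range(height)]
    for x, y, z in points:
        if abs(z - altitude) > band:
            continue
        row, col = math.floor(y / resolution), math.floor(x / resolution)
        if 0 <= row < height and 0 <= col < width:
            grid[row][col] = True
    return grid


def distance_transform(grid):
    """Multi-source BFS: 4-connected grid distance from each cell to the nearest wall."""
    height, width = len(grid), len(grid[0])
    dist = [[math.inf] * width for _ in range(height)]
    queue = deque()
    for r in range(height):
        for c in range(width):
            if grid[r][c]:
                dist[r][c] = 0
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < height and 0 <= nc < width and dist[nr][nc] > dist[r][c] + 1:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist


def _free_interior_cells(dist):
    """Yield free (non-wall) cells that have all four neighbors inside the grid."""
    for r in range(1, len(dist) - 1):
        for c in range(1, len(dist[0]) - 1):
            if dist[r][c] > 0:
                yield r, c


def find_door_saddles(dist):
    """Cells that are a strict minimum along one axis and a strict maximum along the other."""
    doors = []
    for r, c in _free_interior_cells(dist):
        d = dist[r][c]
        up, down, left, right = dist[r - 1][c], dist[r + 1][c], dist[r][c - 1], dist[r][c + 1]
        vertical_min, vertical_max = d < up and d < down, d > up and d > down
        horizontal_min, horizontal_max = d < left and d < right, d > left and d > right
        if (vertical_min and horizontal_max) or (horizontal_min and vertical_max):
            doors.append((r, c))
    return doors


def find_room_circles(dist):
    """Strict local maxima as (row, col, radius): a stand-in for the team's room circles."""
    circles = []
    for r, c in _free_interior_cells(dist):
        d = dist[r][c]
        if all(d > n for n in (dist[r - 1][c], dist[r + 1][c], dist[r][c - 1], dist[r][c + 1])):
            circles.append((r, c, d))
    return circles


# Toy floor plan: two rooms joined by a one-cell doorway in row 5.
FLOOR_PLAN = [
    "#########", "#.......#", "#.......#", "#.......#", "#.......#", "####.####",
    "#.......#", "#.......#", "#.......#", "#.......#", "#########",
]
points = []
for r, line in enumerate(FLOOR_PLAN):
    for c, ch in enumerate(line):
        points.append((c + 0.5, r + 0.5, 0.0))      # floor return, filtered out
        if ch == "#":
            points.append((c + 0.5, r + 0.5, 1.8))  # wall return at flight height
grid = points_to_grid(points, width=9, height=11)
dist = distance_transform(grid)
print(find_door_saddles(dist))  # [(5, 4)]
print(find_room_circles(dist))  # [(3, 4, 3), (7, 4, 3)]
```

On this toy floor plan the sketch reports one doorway and one circle per room. A real system would also have to cope with sensor noise, diagonal walls and flat plateaus in the distance map, none of which this sketch handles.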

Kim explained that the researchers opted for a lidar sensor over a camera for two main reasons. First, the sensor requires less computing power than a camera. Second, conditions inside a collapsed building or at the site of a natural disaster might be dusty or smoky, which would impair a traditional camera's vision.

No centralized base controls the robots. Rather, each robot makes decisions and determines optimal trajectories based on its understanding of the environment and communication with the other robots. The aerial robots share the list of doors and rooms they’ve explored with one another and use this information to avoid areas that have already been visited.
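The article describes this coordination only at a high level. As a minimal sketch under our own assumptions, not the team's protocol, each robot below keeps grow-only sets of known and explored doors, merges any message it receives using set union, and heads for the nearest door that is neither explored nor claimed by another robot. The `Robot` class, the use of doors as stand-ins for rooms, the claim mechanism and its tie-break (the alphabetically smaller robot name keeps a contested door) are all hypothetical illustrations.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Robot:
    """Hypothetical decentralized explorer: no base station, only peer messages."""
    name: str
    position: tuple
    known_doors: set = field(default_factory=set)  # doors detected or heard about
    explored: set = field(default_factory=set)     # doors whose rooms were visited
    claims: dict = field(default_factory=dict)     # door -> robot heading there (assumed)

    def message(self):
        """Snapshot of what this robot shares with its peers."""
        return {"doors": set(self.known_doors),
                "explored": set(self.explored),
                "claims": dict(self.claims)}

    def receive(self, msg):
        """Merge a peer's message; union and min tie-break make merging order-independent."""
        self.known_doors |= msg["doors"]
        self.explored |= msg["explored"]
        for door, owner in msg["claims"].items():
            current = self.claims.get(door)
            # Assumed tie-break: the alphabetically smaller name keeps the door.
            if current is None or owner < current:
                self.claims[door] = owner

    def choose_target(self):
        """Claim the nearest door that is unexplored and not claimed by a peer."""
        free = [d for d in self.known_doors
                if d not in self.explored
                and self.claims.get(d, self.name) == self.name]
        if not free:
            return None
        target = min(free, key=lambda d: (math.dist(self.position, d), d))
        self.claims[target] = self.name
        return target

    def mark_explored(self, door):
        """Record a visited room (keyed by its door) so peers skip it after the next exchange."""
        self.explored.add(door)
        self.claims.pop(door, None)


a = Robot("a", (0.0, 0.0), known_doors={(1.0, 0.0), (5.0, 0.0)})
b = Robot("b", (0.5, 0.0), known_doors={(1.0, 0.0)})
ta = a.choose_target()     # a claims the nearer door
b.receive(a.message())     # b learns of both doors and of a's claim
tb = b.choose_target()     # b skips the claimed door
print(ta, tb)              # (1.0, 0.0) (5.0, 0.0)
a.mark_explored(ta)
b.receive(a.message())
print(sorted(b.explored))  # [(1.0, 0.0)]
```

In this sketch, set union is order-independent and repeating a message changes nothing, so robots can exchange lists whenever they are able to communicate without relying on a central base. The claim tie-break is just one simple way to keep two robots from committing to the same room.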

In addition to Kim, the research team included Micah Corah, a former RI postdoctoral fellow; John Keller, a senior robotics engineer in the RI; Sebastian Scherer, an associate research professor in the RI with a courtesy appointment in the Electrical and Computer Engineering Department; and Graeme Best, a researcher at the University of Technology Sydney.

The team presented their research last month at the 2024 IEEE International Conference on Robotics and Automation. Kim plans to continue researching semantic scene understanding for multirobot exploration and task coordination.