3:30 pm to 4:30 pm

Event Location: 1305 Newell-Simon Hall

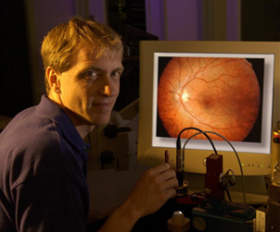

Bio: Gregory D. Hager is a Professor and Chair of Computer Science at Johns Hopkins University and the Deputy Director of the NSF Engineering Research Center for Computer Integrated Surgical Systems and Technology. His research interests include time-series analysis of image data, image-guided robotics, medical applications of image analysis and robotics, and human-computer interaction. He is currently a member of the governing board of the International Federation of Robotics Research and the CRA Computing Community Consortium Council. He is a fellow of the IEEE for his contributions to Vision-Based Robotics.

Abstract: With the rapidly growing popularity of the Intuitive Surgical da Vinci system, robotic minimally invasive surgery (RMIS) has crossed the threshold from the laboratory to the real world. There are now hundreds of thousands of human-guided robotic surgeries performed every year, and the number is growing rapidly. This is a unique example of the larger paradigm of human-machine collaborative systems – namely systems of robots collaborating with people to perform tasks that neither can perform as effectively alone.

In this talk, I will describe our work toward developing effective human-machine collaborative teams. Our research largely focuses on two inter-related problems: 1) recognizing and responding to the intent of the user and 2) evaluating the effectiveness of their performance. We consider surgery to be composed of a set of identifiable tasks which themselves are composed of a small set of reusable motion units that we call “surgemes.” By creating models of this “Language of Surgery,” we are able to evaluate the style and efficiency of surgical motion. These models also lead naturally to methods for effective training of RMIS using automatically learned models of expertise, and toward methods for supporting or even automating component actions in surgery. I will close by briefly describing our aspirations for this work through a recently funded National Robotics Initiative consortium.

This talk includes joint work with Sanjeev Khudanpur and Rene Vidal.