Seminar

Imitating Shortest Paths in Simulation Enables Effective Navigation and Manipulation in the Real World

Abstract: We show that imitating shortest-path planners in simulation produces Stretch RE-1 robotic agents that, given language instructions, can proficiently navigate, explore, and manipulate objects both in simulation and in the real world using only RGB sensors (no depth maps or GPS coordinates). This surprising result is enabled by our end-to-end, transformer-based, SPOC architecture, powerful [...]

Teruko Yata Memorial Lecture

Human-Centric Robots and How Learning Enables Generality

Abstract: Humans have dreamt of robot helpers forever. What's new is that this dream is becoming real. New developments in AI, building on foundations of hardware and passive dynamics, enable vastly improved generality. Robots can step out of highly structured environments and become more human-centric: operating in human [...]

Creating robust deep learning models involves effectively managing nuisance variables

Abstract: Over the past decade, we have witnessed significant advances in the capabilities of deep neural network models in vision and machine learning. However, issues related to bias, discrimination, and fairness in general have received a great deal of negative attention (e.g., mistakes in surveillance and animal-human confusion of vision models). But bias in AI models [...]

Reduced-Gravity Flights and Field Testing for Lunar and Planetary Rovers

Abstract: As humanity returns to the Moon and develops outposts and related infrastructure, we need to understand how robots and work machines will behave in this harsh environment. It is challenging to find representative testing environments on Earth for Lunar and planetary rovers. To investigate the effects of reduced gravity on interactions with granular terrains, [...]

Shedding Light on 3D Cameras

Abstract: The advent (and commoditization) of low-cost 3D cameras is revolutionizing many application domains, including robotics, autonomous navigation, human-computer interfaces, and recently even consumer devices such as cell phones. Most modern 3D cameras (e.g., LiDAR) are active; they consist of a light source that emits coded light into the scene, i.e., its intensity is modulated over [...]

Where’s RobotGPT?

Abstract: Recent years have seen astonishing progress in the capabilities of generative AI techniques, particularly in the areas of language and visual understanding and generation. Key to the success of these models is the use of image and text data sets of unprecedented scale along with models that are able to digest such large [...]

Neural Field Representations of Mobile Computational Photography

Abstract: Burst imaging pipelines allow cellphones to compensate for less-than-ideal optical and sensor hardware by computationally merging multiple lower-quality images into a single high-quality output. The main challenge for these pipelines is compensating for pixel motion, estimating how to align and merge measurements across time while the user's natural hand tremor involuntarily shakes the camera. In [...]

Robot Learning by Understanding Egocentric Videos

Abstract: True gains of machine learning in AI sub-fields such as computer vision and natural language processing have come about from the use of large-scale diverse datasets for learning. In this talk, I will discuss how we can leverage large-scale diverse data in the form of egocentric videos (first-person videos of humans conducting different tasks) [...]

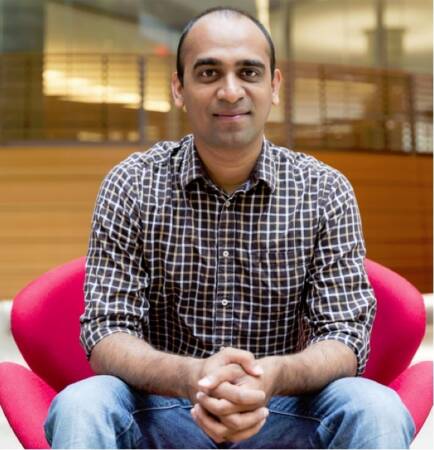

Special Seminar

Speaker: Abhisesh Silwal

Title: Robotics and AI for Sustainable Agriculture

Abstract: Production agriculture plays a critical role in our lives, providing food security and enabling sustainability. Despite its immense importance, it currently faces many challenges, including a shortage of farmworkers, increasing production costs, and excess use of herbicides, to name just a few. Robotics and artificial intelligence-based [...]