Seminar

Toward Human-Centered XR: Bridging Cognition and Computation

Abstract: Virtual and Augmented Reality enable unprecedented possibilities for displaying virtual content, sensing physical surroundings, and tracking human behaviors with high fidelity. However, we still haven't created "superhumans" who can outperform what we are in physical reality, nor a "perfect" XR system that delivers infinite battery life or realistic sensation. In this talk, I will discuss some of our [...]

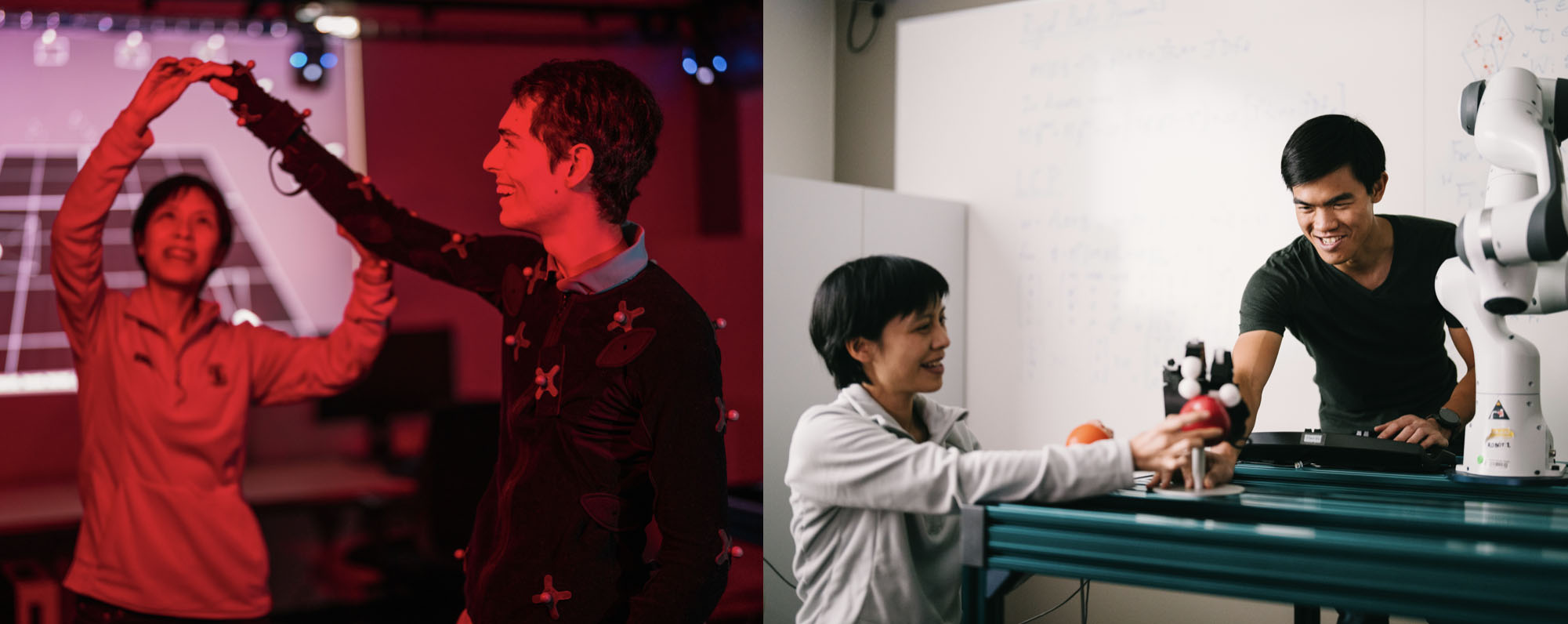

Carnegie Mellon Graphics Colloquium: C. Karen Liu: Building Large Models for Human Motion

Abstract: Large generative models for human motion, analogous to ChatGPT for text, will enable human motion synthesis and prediction for a wide range of applications such as character animation, humanoid robots, AR/VR motion tracking, and healthcare. This model would generate diverse, realistic human motions and behaviors, including kinematics and dynamics, [...]

Teaching a Robot to Perform Surgery: From 3D Image Understanding to Deformable Manipulation

Abstract: Robot manipulation of rigid household objects and environments has made massive strides in the past few years due to the achievements of the computer vision and reinforcement learning communities. One area that has taken off at a slower pace is the manipulation of deformable objects. For example, surgical robotics are used today via teleoperation from a [...]

Zeros for Data Science

Abstract: The world around us is neither totally regular nor completely random. Our and robots’ reliance on spatiotemporal patterns in daily life cannot be over-stressed, given the fact that most of us can function (perceive, recognize, navigate) effectively in chaotic and previously unseen physical, social and digital worlds. Data science has been promoted and practiced [...]

Emotion perception: progress, challenges, and use cases

Abstract: One of the challenges Human-Centric AI systems face is understanding human behavior and emotions considering the context in which they take place. For example, current computer vision approaches for recognizing human emotions usually focus on facial movements and often ignore the context in which the facial movements take place. In this presentation, I will [...]

Foundation Models for Robotic Manipulation: Opportunities and Challenges

Abstract: Foundation models, such as GPT-4 Vision, have marked significant achievements in the fields of natural language and vision, demonstrating exceptional abilities to adapt to new tasks and scenarios. However, physical interaction—such as cooking, cleaning, or caregiving—remains a frontier where foundation models and robotic systems have yet to achieve the desired level of adaptability and [...]

Learning with Less

Abstract: The performance of an AI is nearly always associated with the amount of data you have at your disposal. Self-supervised machine learning can help – mitigating tedious human supervision – but the need for massive training datasets in modern AI seems unquenchable. Sometimes it is not the amount of data, but the mismatch of [...]

Why We Should Build Robot Apprentices And Why We Shouldn’t Do It Alone

Abstract: For robots to be able to truly integrate into human-populated, dynamic, and unpredictable environments, they will have to have strong adaptive capabilities. In this talk, I argue that these adaptive capabilities should leverage interaction with end users, who know how (they want) a robot to act in that environment. I will present an overview of [...]

Toward an ImageNet Moment for Synthetic Data

Abstract: Data, especially large-scale labeled data, has been a critical driver of progress in computer vision. However, many important tasks remain starved of high-quality data. Synthetic data from computer graphics is a promising solution to this challenge, but still remains in limited use. This talk will present our work on Infinigen, a procedural synthetic data [...]

Imitating Shortest Paths in Simulation Enables Effective Navigation and Manipulation in the Real World

Abstract: We show that imitating shortest-path planners in simulation produces Stretch RE-1 robotic agents that, given language instructions, can proficiently navigate, explore, and manipulate objects both in simulation and in the real world using only RGB sensors (no depth maps or GPS coordinates). This surprising result is enabled by our end-to-end, transformer-based, SPOC architecture, powerful [...]