Towards Energy-Efficient Techniques and Applications for Universal AI Implementation

Abstract: The rapid advancement of large-scale language and vision models has significantly propelled the AI domain. We now see AI enriching everyday life in numerous ways – from community and shared virtual reality experiences to autonomous vehicles, healthcare innovations, and accessibility technologies, among others. Central to these developments is the real-time implementation of high-quality deep [...]

RI Faculty Business Meeting

Meeting for RI Faculty. Discussions include various department topics, policies, and procedures. Generally meets weekly.

Watch, Practice, Improve: Towards In-the-wild Manipulation

Abstract: The longstanding dream of many roboticists is to see robots perform diverse tasks in diverse environments. To build such a robot that can operate anywhere, many methods train on robotic interaction data. While these approaches have led to significant advances, they rely on heavily engineered setups or high amounts of supervision, neither of which [...]

Structure-from-Motion Meets Self-supervised Learning

Abstract: How can we teach a machine to perceive the 3D world from unlabeled videos? We will present a new solution that incorporates Structure-from-Motion (SfM) into self-supervised model learning. Given RGB inputs, deep models learn to regress depth and correspondence. With the two inputs, we introduce a camera localization algorithm that searches for certified globally optimal poses. However, the [...]

Combining Physics-Based Light Transport and Neural Fields for Robust Inverse Rendering

Abstract: Inverse rendering — the process of recovering shape, material, and/or lighting of an object or environment from a set of images — is essential for applications in robotics and elsewhere, from AR/VR to perception on self-driving vehicles. While it is possible to perform inverse rendering from color images alone, it is often far easier [...]

Improving the Transparency of Agent Decision Making to Humans Using Demonstrations

Abstract: For intelligent agents (e.g. robots) to be seamlessly integrated into human society, humans must be able to understand their decision making. For example, the decision making of autonomous cars must be clear to the engineers certifying their safety, passengers riding them, and nearby drivers negotiating the road simultaneously. As an agent's decision making depends [...]

Robotic Climbing for Extreme Terrain Exploration

Abstract: Climbing robots can operate in steep and unstructured environments that are inaccessible to other ground robots, with applications ranging from the inspection of artificial structures on Earth to the exploration of natural terrain features throughout the solar system. Climbing robots for planetary exploration face many challenges to deployment, including mass restrictions, irregular surface features, [...]

Layout Design for Large-Scale Multi-Robot Coordination

Abstract: Today, thousands of robots are navigating autonomously in warehouses, transporting goods from one location to another. While numerous planning algorithms are developed to coordinate robots more efficiently and robustly, warehouse layouts remain largely unchanged – they still adhere to the traditional pattern designed for human workers rather than robots. In this talk, I will [...]

Perception amidst interaction: spatial AI with vision and touch for robot manipulation

Abstract: Robots currently lack the cognition to replicate even a fraction of the tasks humans do, a trend summarized by Moravec's Paradox. Humans effortlessly combine their senses for everyday interactions—we can rummage through our pockets in search of our keys, and deftly insert them to unlock our front door. Before robots can demonstrate such dexterity, [...]

Toward Human-Centered XR: Bridging Cognition and Computation

Abstract: Virtual and Augmented Reality enable unprecedented possibilities for displaying virtual content, sensing physical surroundings, and tracking human behaviors with high fidelity. However, we still haven't created "superhumans" who can outperform what we are capable of in physical reality, nor a "perfect" XR system that delivers infinite battery life or realistic sensation. In this talk, I will discuss some of our [...]

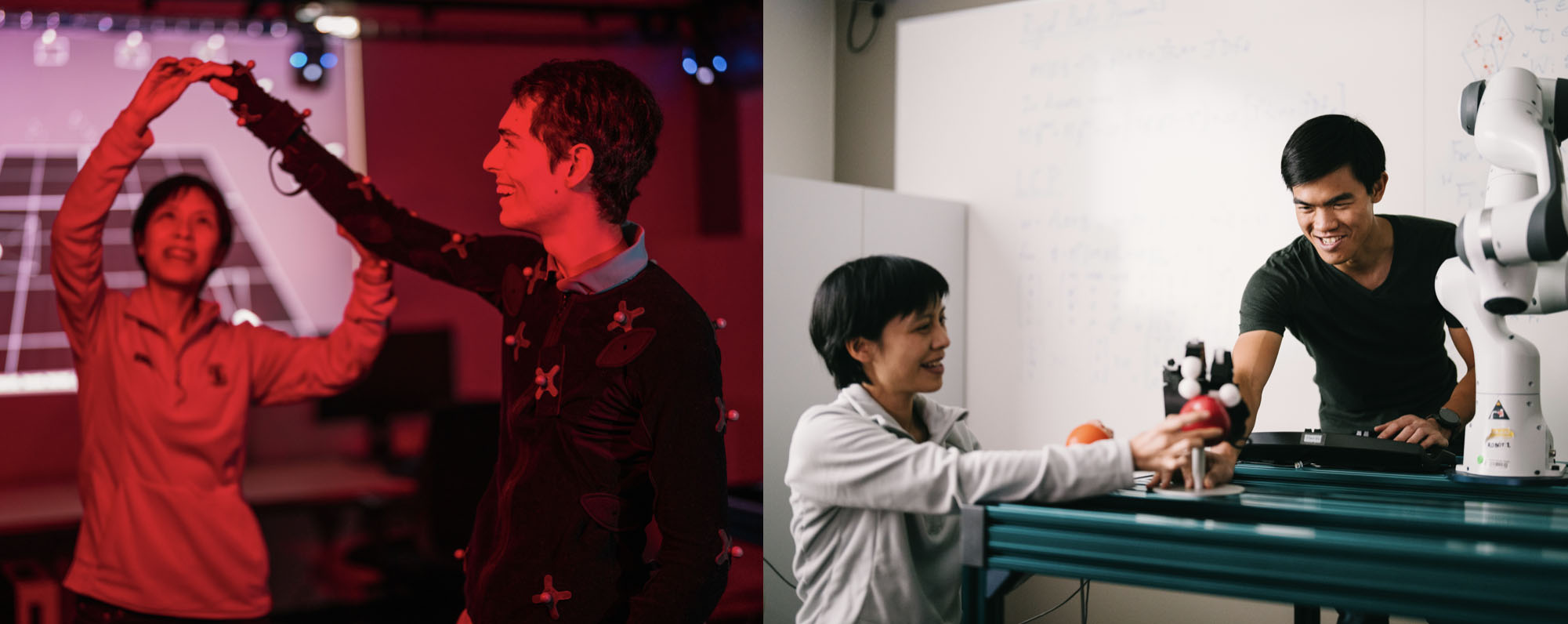

Carnegie Mellon Graphics Colloquium: C. Karen Liu: Building Large Models for Human Motion

Abstract: Large generative models for human motion, analogous to ChatGPT for text, will enable human motion synthesis and prediction for a wide range of applications such as character animation, humanoid robots, AR/VR motion tracking, and healthcare. This model would generate diverse, realistic human motions and behaviors, including kinematics and dynamics, [...]

Teaching a Robot to Perform Surgery: From 3D Image Understanding to Deformable Manipulation

Abstract: Robot manipulation of rigid household objects and environments has made massive strides in the past few years due to achievements in the computer vision and reinforcement learning communities. One area that has taken off at a slower pace is the manipulation of deformable objects. For example, surgical robotics are used today via teleoperation from a [...]

Zeros for Data Science

Abstract: The world around us is neither totally regular nor completely random. Our reliance, and that of robots, on spatiotemporal patterns in daily life cannot be overstated, given that most of us can function (perceive, recognize, navigate) effectively in chaotic and previously unseen physical, social and digital worlds. Data science has been promoted and practiced [...]

RI Faculty Business Meeting

Meeting for RI Faculty. Discussions include various department topics, policies, and procedures. Generally meets weekly.

Emotion perception: progress, challenges, and use cases

Abstract: One of the challenges Human-Centric AI systems face is understanding human behavior and emotions considering the context in which they take place. For example, current computer vision approaches for recognizing human emotions usually focus on facial movements and often ignore the context in which the facial movements take place. In this presentation, I will [...]

[MSR Thesis Talk] SplaTAM: Splat, Track & Map 3D Gaussians for Dense RGB-D SLAM

Abstract: Dense simultaneous localization and mapping (SLAM) is crucial for numerous robotic and augmented reality applications. However, current methods are often hampered by the non-volumetric or implicit way they represent a scene. This talk introduces SplaTAM, an approach that leverages explicit volumetric representations, i.e., 3D Gaussians, to enable high-fidelity reconstruction from a single unposed RGB-D [...]

Language: You’ve probably heard of it, read it, written it, gestured it, mimed it… Why can’t robots?

Abstract: Language is how meaning is conveyed between humans, and now the basis of foundation models. By implication, it's the most important modality for all of AGI and will replace the entire robotics control stack as the most important thing for all of us to work on.

RI Faculty Business Meeting

Meeting for RI Faculty. Discussions include various department topics, policies, and procedures. Generally meets weekly.

Foundation Models for Robotic Manipulation: Opportunities and Challenges

Abstract: Foundation models, such as GPT-4 Vision, have marked significant achievements in the fields of natural language and vision, demonstrating exceptional abilities to adapt to new tasks and scenarios. However, physical interaction—such as cooking, cleaning, or caregiving—remains a frontier where foundation models and robotic systems have yet to achieve the desired level of adaptability and [...]

Learning with Less

Abstract: The performance of an AI is nearly always associated with the amount of data you have at your disposal. Self-supervised machine learning can help – mitigating tedious human supervision – but the need for massive training datasets in modern AI seems unquenchable. Sometimes it is not the amount of data, but the mismatch of [...]

Human Perception of Robot Failure and Explanation During a Pick-and-Place Task

Abstract: In recent years, researchers have extensively used non-verbal gestures, such as head and arm movements, to express the robot's intentions and capabilities to humans. Inspired by past research, we investigated how different explanation modalities can aid human understanding and perception of how robots communicate failures and provide explanations during block pick-and-place tasks. Through an in-person [...]

RI Faculty Business Meeting

Meeting for RI Faculty. Discussions include various department topics, policies, and procedures. Generally meets weekly.

Why We Should Build Robot Apprentices And Why We Shouldn’t Do It Alone

Abstract: For robots to be able to truly integrate into human-populated, dynamic, and unpredictable environments, they will have to have strong adaptive capabilities. In this talk, I argue that these adaptive capabilities should leverage interaction with end users, who know how (they want) a robot to act in that environment. I will present an overview of [...]

Learning Distributional Models for Relative Placement

Abstract: Relative placement tasks are an important category of tasks in which one object needs to be placed in a desired pose relative to another object. Previous work has shown success in learning relative placement tasks from just a small number of demonstrations, when using relational reasoning networks with geometric inductive biases. However, such methods fail [...]

Robust Body Exposure (RoBE): A Graph-based Dynamics Modeling Approach to Manipulating Blankets over People

Abstract: Robotic caregivers could potentially improve the quality of life of many who require physical assistance. However, in order to assist individuals who are lying in bed, robots must be capable of dealing with a significant obstacle: the blanket or sheet that will almost always cover the person's body. We propose a method for targeted [...]

Exploration for Continually Improving Robots

Abstract: General-purpose robots should be able to perform arbitrary manipulation tasks, and get better at performing new ones as they obtain more experience. The current paradigm in robot learning involves imitation or simulation. Scaling these approaches to learn from more data for various tasks is bottlenecked by the human labor required either in collecting demonstrations [...]

Sparse-view 3D in the Wild

Abstract: Reconstructing 3D scenes and objects from images alone has been a long-standing goal in computer vision. We have seen tremendous progress in recent years, with methods now capable of producing near photo-realistic renderings from any viewpoint. However, existing approaches generally rely on a large number of input images (typically 50-100) to compute camera poses and ensure view [...]

Deep 3D Geometric Reasoning for Robot Manipulation

Abstract: To solve general manipulation tasks in real-world environments, robots must be able to perceive and condition their manipulation policies on the 3D world. These agents will need to understand various common-sense spatial/geometric concepts about manipulation tasks: that local geometry can suggest potential manipulation strategies, that policies should be invariant across choice of reference frame, [...]

RI Faculty Business Meeting

Meeting for RI Faculty. Discussions include various department topics, policies, and procedures. Generally meets weekly.

Toward an ImageNet Moment for Synthetic Data

Abstract: Data, especially large-scale labeled data, has been a critical driver of progress in computer vision. However, many important tasks remain starved of high-quality data. Synthetic data from computer graphics is a promising solution to this challenge, but still remains in limited use. This talk will present our work on Infinigen, a procedural synthetic data [...]