Autonomous Land Vehicle In a Neural Network

Project Head:

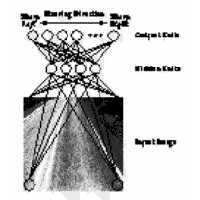

ALVINN is a perception system that learns to control the NAVLAB vehicles by watching a person drive. Its architecture is a back-propagation network with a single hidden layer. The input layer is a 30×32-unit two-dimensional “retina” that receives input from the vehicle's video camera. Each input unit is fully connected to a layer of five hidden units, which are in turn fully connected to a layer of 30 output units. The output layer is a linear representation of the direction the vehicle should travel to stay on the road.
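As a minimal illustration, the network described above can be sketched in plain Python. Only the layer sizes (a 30×32 retina, five hidden units, 30 output units), the full connectivity, and back-propagation training come from the description. Everything else is an assumption made for the sketch: sigmoid units, a squared-error loss, plain gradient descent with a small learning rate, a Gaussian "bump" of target activation centred on the driver's steering direction, and reading the steering direction back as the activation-weighted mean output unit. All names (`TinyAlvinnNet`, `steering_target`, `decode_steering`) are hypothetical.

```python
import math
import random

# Layer sizes from the description: 30x32 retina, 5 hidden units, 30 output units.
INPUT_ROWS, INPUT_COLS = 30, 32
N_INPUT = INPUT_ROWS * INPUT_COLS  # 960 input units
N_HIDDEN = 5
N_OUTPUT = 30


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyAlvinnNet:
    """Fully connected 960-5-30 network trained by back-propagation.

    Assumptions (not from the source): sigmoid units, per-unit biases,
    small random initial weights, squared-error loss, plain gradient descent.
    """

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.05, 0.05) for _ in range(N_INPUT)] for _ in range(N_HIDDEN)]
        self.b1 = [0.0] * N_HIDDEN
        self.w2 = [[rng.uniform(-0.05, 0.05) for _ in range(N_HIDDEN)] for _ in range(N_OUTPUT)]
        self.b2 = [0.0] * N_OUTPUT

    def forward(self, retina):
        # retina: flat list of N_INPUT pixel intensities in [0, 1].
        hidden = [sigmoid(sum(w * x for w, x in zip(row, retina)) + b)
                  for row, b in zip(self.w1, self.b1)]
        output = [sigmoid(sum(w * h for w, h in zip(row, hidden)) + b)
                  for row, b in zip(self.w2, self.b2)]
        return hidden, output

    def train_step(self, retina, target, lr=0.01):
        """One back-propagation update; returns the squared error before the update."""
        hidden, output = self.forward(retina)
        # Output deltas: dE/dnet for E = 0.5 * sum((o - t)^2) with sigmoid outputs.
        d_out = [(o - t) * o * (1.0 - o) for o, t in zip(output, target)]
        # Hidden deltas, back-propagated through the output weights before they change.
        d_hid = [h * (1.0 - h) * sum(d_out[k] * self.w2[k][j] for k in range(N_OUTPUT))
                 for j, h in enumerate(hidden)]
        # Gradient-descent updates for the hidden-to-output layer.
        for k in range(N_OUTPUT):
            for j in range(N_HIDDEN):
                self.w2[k][j] -= lr * d_out[k] * hidden[j]
            self.b2[k] -= lr * d_out[k]
        # Gradient-descent updates for the retina-to-hidden layer.
        for j in range(N_HIDDEN):
            step = lr * d_hid[j]
            row = self.w1[j]
            for i in range(N_INPUT):
                row[i] -= step * retina[i]
            self.b1[j] -= step
        return 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))


def steering_target(unit, width=2.0):
    # Hypothetical encoding: Gaussian activation centred on the output unit for the
    # driver's steering direction (unit 0 = hard left, unit 29 = hard right, assumed).
    return [math.exp(-((i - unit) ** 2) / (2 * width ** 2)) for i in range(N_OUTPUT)]


def decode_steering(output):
    # Hypothetical decoding: activation-weighted mean index over all output units.
    total = sum(output)
    return sum(i * a for i, a in enumerate(output)) / total


if __name__ == "__main__":
    rng = random.Random(1)
    image = [rng.random() for _ in range(N_INPUT)]  # stand-in for one camera frame
    target = steering_target(20)                    # the person steered toward unit 20
    net = TinyAlvinnNet()
    errors = [net.train_step(image, target) for _ in range(100)]
    print("error:", round(errors[0], 3), "->", round(errors[-1], 3))
    print("decoded steering unit:", round(decode_steering(net.forward(image)[1]), 2))
```

Spreading the target over neighbouring output units, rather than switching on a single unit, lets nearby directions share credit and makes the weighted-mean decoding meaningful; this is a design choice of the sketch, not a statement of how the original system was trained.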


1998

Book Section/Chapter, The Handbook of Brain Theory and Neural Networks, July, 1998

1996

Tech. Report, CMU-RI-TR-96-31, Robotics Institute, Carnegie Mellon University, October, 1996

1995

Conference Paper, Proceedings of the IEEE Intelligent Vehicles Symposium (IV '95), pp. 30-35, September, 1995

Conference Paper, Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS '95), Vol. 1, pp. 35-40, August, 1995

Conference Paper, Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS '95), Vol. 3, pp. 344-349, August, 1995

1994

Tech. Report, CMU-RI-TR-94-43, Robotics Institute, Carnegie Mellon University, December, 1994

Conference Paper, Proceedings of Government Microcircuit Applications Conference (GOMAC '94), pp. 358-362, November, 1994

1993

Conference Paper, Proceedings of 3rd International Conference on Intelligent Autonomous Systems (IAS '93), February, 1993

Book Section/Chapter, Robot Learning, 1993

1992

Conference Paper, Proceedings of the Intelligent Vehicles Symposium (IV '92), pp. 391-396, June, 1992