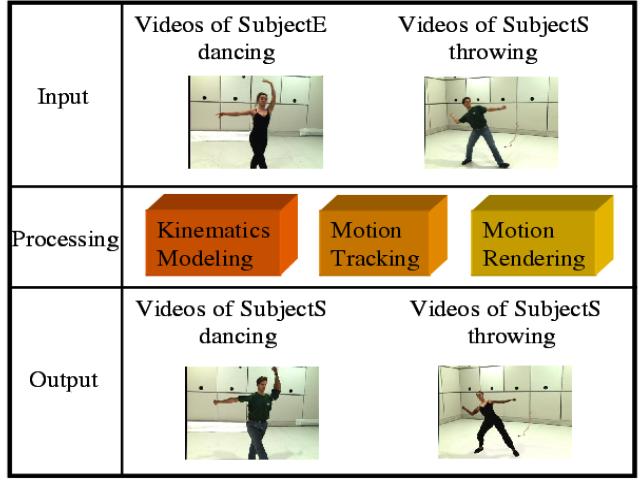

Consider the following scenario: in a room equipped with multiple cameras, we record videos of two people, each separately performing a different motion. From these recorded videos, we then produce videos of each person performing the other person’s motion. In this project, we have developed a vision-based system that does exactly this, i.e. it transfers the motion of one person to a second person. The human motion transfer system consists of three steps: (1) kinematic modeling, i.e. building a 3D model of each person with a precise shape and accurate joint locations; (2) motion tracking, i.e. tracking the motion of each person in the recorded video; and (3) motion rendering, i.e. rendering images of the person performing the new motion using color information from the recorded video. These steps are illustrated in the following figure:

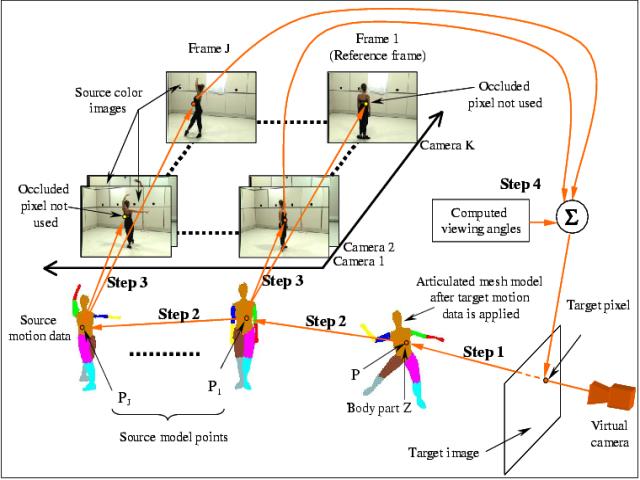
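The core idea linking steps (1) and (2) can be illustrated with a deliberately simplified sketch. Suppose kinematic modeling gives each person's own bone lengths and motion tracking gives per-frame joint angles. Transferring motion then amounts to driving person B's skeleton with person A's joint angles, so the result keeps B's body proportions. This is a hypothetical illustration, not the system's actual 3D model. It assumes a 2D planar chain, joint angles measured relative to the parent segment, and the made-up names `KinematicChain`, `forward_kinematics`, and `transfer_motion`. Step (3), rendering with color from the recorded video, is not covered.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class KinematicChain:
    """Hypothetical planar stand-in for a person's kinematic model:
    a root position plus that person's own bone lengths."""
    root: Point
    bone_lengths: List[float]


def forward_kinematics(chain: KinematicChain, joint_angles: List[float]) -> List[Point]:
    """Return joint positions (root first) for one frame.

    Each angle (radians) is relative to the parent segment, so headings
    accumulate along the chain.
    """
    if len(joint_angles) != len(chain.bone_lengths):
        raise ValueError("need exactly one joint angle per bone")
    x, y = chain.root
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(chain.bone_lengths, joint_angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


def transfer_motion(target: KinematicChain,
                    source_motion: List[List[float]]) -> List[List[Point]]:
    """Pose the target's skeleton with the source's per-frame joint angles."""
    return [forward_kinematics(target, frame) for frame in source_motion]


# Usage: person A's tracked motion (two frames, two-segment arm) applied to
# person B, whose bone lengths differ from A's.
motion_a = [[0.0, 0.0], [math.pi / 2, -math.pi / 2]]
person_b = KinematicChain(root=(0.0, 0.0), bone_lengths=[2.0, 1.0])
frames = transfer_motion(person_b, motion_a)
```

Because only joint angles are copied, B's limbs keep their own lengths while following A's motion.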

The details of the first two steps can be found on our webpage describing our Human Kinematic Modeling and Motion Capture system. The rendering algorithm is illustrated in the following figure:

The results of applying our system to three motions are as follows.



- Simon Baker