LIDAR and Vision Sensor Fusion for Autonomous Vehicle Navigation

Project Heads: Takeo Kanade, Martial Hebert, and Daniel Huber

Autonomous vehicles generally rely on multiple sensors with different sensing modalities to navigate and avoid obstacles reliably. In this research, we are studying the combination of laser-based 3D sensors (LIDARs) with image-based sensors, including stereo and monocular imagery. One key idea we are exploring is tightly integrating these sensing modalities throughout every step of an algorithm. Another central aspect of the research is the effect of timing, in particular the temporal errors that arise when measurements from different sensing modalities are not perfectly synchronized. This project covers several specific research topics:

- Optimal LIDAR Sensor Configuration – When designing a system with multiple LIDARs, how should they be mounted and configured to optimize the system’s sensing capabilities?

- Tightly Integrated Stereo and LIDAR – How can sparse but accurate 3D data from LIDAR improve the accuracy and speed of dense stereo estimation?

- Terrain Estimation using Space Carving Kernels – How can information about the ray extending from the sensor to the sensed surface be used to improve terrain estimation in unstructured environments?

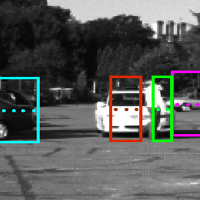

- Moving Object Detection, Modeling, and Tracking – How can vision and 3D LIDAR data be combined for detecting and tracking moving objects?
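The synchronization issue raised in the project overview can be made concrete with a small calculation. If a LIDAR point is timestamped `dt` seconds before the camera image it is fused with, and the vehicle moves during that interval, the point has to be re-expressed in the vehicle frame at the camera time before it can be associated with image pixels. The sketch below assumes planar motion with constant speed and yaw rate (a constant-turn-rate model) and a known offset `dt`; the function name `compensate_lidar_point` and its frame convention (x forward, y left) are hypothetical and are not taken from the project's publications.

```python
import math


def compensate_lidar_point(point, dt, speed, yaw_rate):
    """Re-express a LIDAR point in the vehicle frame at the camera timestamp.

    Hypothetical helper (not from the project). Assumes planar motion with
    constant forward speed (m/s) and yaw rate (rad/s) during the offset dt (s),
    where dt = t_camera - t_lidar. Vehicle frame: x forward, y left, metres.
    """
    x, y = point
    dtheta = yaw_rate * dt
    # Vehicle displacement over dt under a constant-turn-rate motion model.
    if abs(yaw_rate) < 1e-9:
        dx, dy = speed * dt, 0.0
    else:
        radius = speed / yaw_rate
        dx = radius * math.sin(dtheta)
        dy = radius * (1.0 - math.cos(dtheta))
    # Move the point into the new vehicle frame: subtract the displacement,
    # then rotate by -dtheta to undo the change in heading.
    tx, ty = x - dx, y - dy
    c, s = math.cos(dtheta), math.sin(dtheta)
    return (c * tx + s * ty, -s * tx + c * ty)
```

For example, with a 50 ms offset and straight driving at 20 m/s, an uncompensated point is misplaced by 20 m/s × 0.05 s = 1 m, so even small timing errors become large spatial errors at road speeds.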
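One common way sparse LIDAR depth can make dense stereo both faster and more accurate is by restricting the disparity search. For a rectified stereo pair with focal length f (pixels) and baseline B (metres), a point at depth Z appears at disparity d = fB/Z, so a LIDAR depth with a known uncertainty bounds the disparities worth testing. The sketch below illustrates that idea on a single scanline with sum-of-absolute-differences (SAD) block matching; it is an assumed example, not necessarily the method used in this project, and the function names, the 3-sigma interval, and the window size are hypothetical choices.

```python
import math


def disparity_bounds(depth_m, depth_sigma_m, focal_px, baseline_m, k=3.0, max_disp=None):
    """Integer disparity interval consistent with a LIDAR depth of depth_m +/- k*sigma.

    Uses d = f * B / Z for a rectified pair; nearer depths give larger disparities.
    """
    near = max(depth_m - k * depth_sigma_m, 1e-6)
    far = depth_m + k * depth_sigma_m
    d_min = focal_px * baseline_m / far
    d_max = focal_px * baseline_m / near
    if max_disp is not None:
        d_max = min(d_max, max_disp)
    return int(math.floor(d_min)), int(math.ceil(d_max))


def match_pixel(left_row, right_row, x, d_range, half_win=2):
    """SAD block matching along one rectified scanline, testing only d_range."""
    best_d, best_cost = None, float("inf")
    lo, hi = d_range
    for d in range(lo, hi + 1):
        xr = x - d  # candidate column in the right image
        if xr - half_win < 0 or x + half_win >= len(left_row):
            continue  # window would fall outside the image
        cost = sum(abs(left_row[x + i] - right_row[xr + i])
                   for i in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d
```

With f = 500 px, B = 0.5 m, and a LIDAR depth of 10 m ± 0.1 m, `disparity_bounds` returns the interval 24 to 26, so only three disparities are evaluated instead of the full search range, and false matches outside that interval cannot be selected.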
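The space-carving question rests on a simple observation: a LIDAR beam that returns from a surface has travelled through empty space on its way there, so for terrain modelled as a height field, each ray bounds the ground height from above along its whole path, not just at its endpoint. The sketch below applies that observation to a 1-D vertical slice of terrain. It is a simplified, hypothetical illustration (the function names, the grid, and the rule of discarding returns above the bound are assumptions), not the space carving kernels developed in the project.

```python
import math


def carve_terrain_profile(sensor, hits, cell_size, n_cells):
    """Collect height evidence on a 1-D terrain profile (a vertical slice).

    Hypothetical illustration, not the project's kernel formulation.
    sensor: (x, z) of the LIDAR origin in metres; hits: list of (x, z) returns.
    Returns (upper, measured): per-cell upper bounds on terrain height implied
    by the free space along each ray, and the return heights in each cell.
    """
    upper = [math.inf] * n_cells
    measured = [[] for _ in range(n_cells)]
    sx, sz = sensor
    for hx, hz in hits:
        hit_cell = int(hx // cell_size)
        if hx <= sx or not 0 <= hit_cell < n_cells:
            continue  # this sketch only handles forward (+x) rays that land on the grid
        measured[hit_cell].append(hz)
        # The ray crossed every earlier cell without being blocked, so the
        # terrain in those cells must lie below the ray's height there.
        for c in range(max(int(sx // cell_size), 0), hit_cell):
            t = ((c + 0.5) * cell_size - sx) / (hx - sx)  # cell centre's position along the ray
            if 0.0 <= t < 1.0:
                upper[c] = min(upper[c], sz + t * (hz - sz))
    return upper, measured


def estimate_heights(upper, measured):
    """Average the returns in each cell that are consistent with the carved bound.

    Returns above the bound (e.g. dust or an overhang) cannot belong to a
    height-field terrain and are dropped; cells with no usable return give None.
    """
    estimates = []
    for bound, heights in zip(upper, measured):
        consistent = [z for z in heights if z <= bound]
        estimates.append(sum(consistent) / len(consistent) if consistent else None)
    return estimates
```

In a slice with the sensor at (0, 1.5) m and returns at (2.4, 0.05), (2.5, 1.2), and (8.5, 0.0) m on a 1 m grid, the long ray to the distant ground point passes through cell 2 at about 1.06 m, so the 1.2 m return in that cell is rejected and the cell's estimate is 0.05 m. Cells 3 to 7 receive no returns but still get a finite upper bound from the carved ray.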

Publications

2010

Conference Paper, Proceedings of the International Conference on Robotics and Automation (ICRA), pp. 3939-3945, May 2010

2009

Conference Paper, Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2182-2189, October 2009

Conference Paper, Proceedings of Robotics: Science and Systems (RSS '09), June 2009

Past Staff

- Hernan Badino

- Ankit Desai

- Raia Hadsell

- Ki Ho Kwak

- Umang Shah