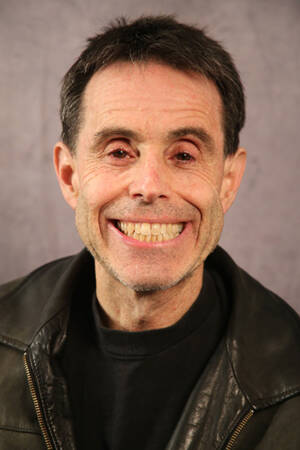

The Biorobotics group has embraced and advanced modular robot systems, starting with our snake robots and now extending to virtually all of our hardware and software systems. The most immediate benefit of a modular robotic system is that a core set of modules can be combined and recombined to form a customized robot, perhaps on a daily basis. Modularity also improves serviceability: if a module fails, it simply needs to be replaced. In our work, we have also found that modularity allows for rapid design of near-final systems, sometimes creating a tight design loop between the designer and the user.

Our group’s work toward modularity has taken a comprehensive view, ranging from low-level hardware and software support, to mid-level parameter estimation and control, all the way to high-level artificial intelligence. Regardless of the level or aspect of modularity under development, scalability pervades our research. Scalability can take several forms; for example, the number of possible robot designs grows exponentially with the number of core modules (really, module types) in a given set. The number of system-level controllers, and the number of behaviors that depend on those controllers, also grow exponentially, and even faster than the number of robot designs.
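To make this combinatorial growth concrete, here is a minimal counting sketch. It assumes a deliberately simplified, hypothetical design space (not the group's actual module set): a base with a fixed number of attachment ports, each of which is either left empty or filled with one of `k` module types, and every port assignment counts as a distinct design. Under that assumption there are (k+1)^n designs for n ports, and a brute-force enumeration confirms the closed form.

```python
from itertools import product


def count_designs(num_types: int, num_ports: int) -> int:
    """Closed form under the simplified model: each port is empty
    or holds exactly one of num_types module types."""
    return (num_types + 1) ** num_ports


def enumerate_designs(module_types, num_ports):
    """Brute-force list of every port assignment (None marks an empty port)."""
    options = [None] + list(module_types)
    return list(product(options, repeat=num_ports))


if __name__ == "__main__":
    types = ["leg", "wheel", "sensor"]  # hypothetical module types
    for ports in range(1, 7):
        n = len(enumerate_designs(types, ports))
        assert n == count_designs(len(types), ports)
        print(f"{ports} ports -> {n} designs")
```

With three module types this prints 4, 16, 64, 256, 1024 and 4096 designs for one through six ports. Symmetric duplicates and physically invalid assemblies are not filtered out here, so a real design space would be smaller per port but would still grow rapidly.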

Our current research focuses on how to automatically synthesize the robot design and robot controllers for modular robots.

We have developed model-based reinforcement learning algorithms to train a reactive control policy for modular robots. The resulting network takes a robot design as input and outputs a controller for that design. Given the large space of designs, it would be intractable to use data from all possible designs in the training process. We instead train the policy on a varied subset of designs, which teaches it how to adapt its behavior to the design it controls. The policy then generalizes well to new designs: we show zero-shot transfer to an order of magnitude more designs than the training set contains, none of which were seen during training.

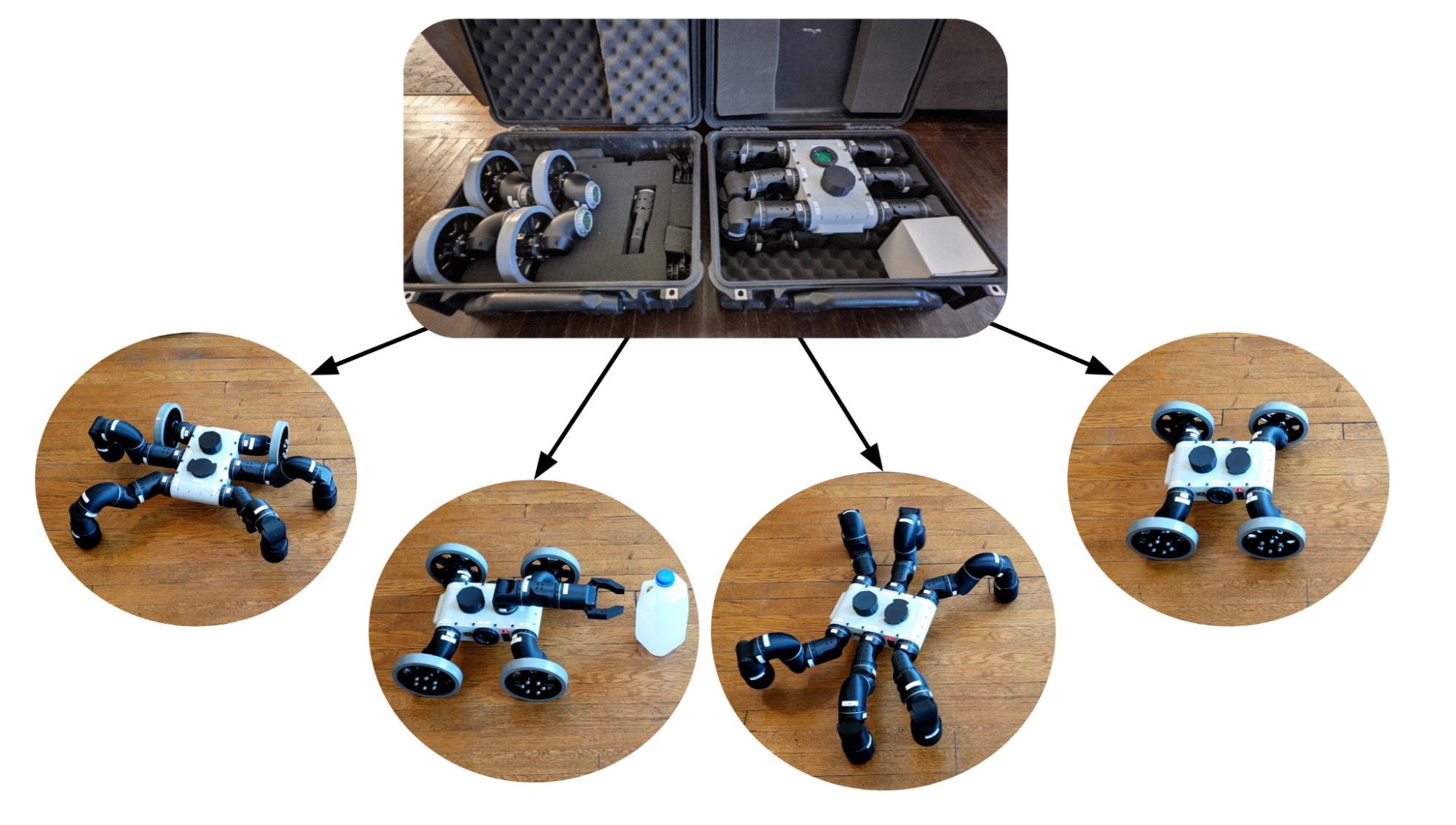

Our modular policy architecture. Top: the modules, depicted by the three boxes in the upper left, can be composed to form different designs. Each module has an associated deep neural network, indicated by the brain icons, which takes that module’s sensor measurements and the messages received from its neighbors, and produces the module’s actuator commands and the messages it sends back to its neighbors. Assembling the modules into a robot (bottom left) creates a graph neural network (GNN, bottom right) whose structure mirrors the design: nodes correspond to modules, and edges to the connections between them. The architecture is decentralized in form, but because the messages passed along the edges influence how each node behaves, the graph of networks can learn to produce coordinated outputs, as a centralized controller would.
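The message-passing idea in the caption can be sketched as an untrained forward pass in NumPy. This is a minimal illustration, not the group's implementation: it assumes one small network per module type, shared by every instance of that type; sum aggregation of neighbor messages; a fixed number of message rounds; and identical sensor and actuator sizes for all modules. All names (`NETS`, `policy`, the module types) and dimensions are hypothetical, and the model-based reinforcement learning that would train these weights is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
OBS, MSG, ACT, HID = 4, 8, 2, 16  # hypothetical sizes


def init_mlp(in_dim, out_dim):
    """Random two-layer MLP parameters (untrained)."""
    return (rng.normal(0, 0.1, (in_dim, HID)), np.zeros(HID),
            rng.normal(0, 0.1, (HID, out_dim)), np.zeros(out_dim))


def mlp(params, x):
    w1, b1, w2, b2 = params
    return np.tanh(x @ w1 + b1) @ w2 + b2


# One message network and one action network per module type,
# shared by every module of that type (an assumption of this sketch).
NETS = {t: {"msg": init_mlp(OBS + MSG, MSG), "act": init_mlp(OBS + MSG, ACT)}
        for t in ("body", "leg", "wheel")}


def aggregate(msgs, neighbors):
    """Sum the messages each module's neighbors sent in the last round."""
    return np.stack([msgs[nb].sum(axis=0) if nb else np.zeros(MSG)
                     for nb in neighbors])


def policy(design, edges, obs, rounds=3):
    """design: module type per node; edges: undirected (i, j) connections;
    obs: (num_modules, OBS) sensor readings. Returns (num_modules, ACT)."""
    neighbors = [[] for _ in design]
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)
    msgs = np.zeros((len(design), MSG))
    for _ in range(rounds):
        x = np.concatenate([obs, aggregate(msgs, neighbors)], axis=1)
        msgs = np.stack([mlp(NETS[t]["msg"], x[i]) for i, t in enumerate(design)])
    x = np.concatenate([obs, aggregate(msgs, neighbors)], axis=1)
    return np.stack([mlp(NETS[t]["act"], x[i]) for i, t in enumerate(design)])


if __name__ == "__main__":
    # The same weights control two different designs.
    quad = ["body", "leg", "leg", "leg", "leg"]
    quad_edges = [(0, 1), (0, 2), (0, 3), (0, 4)]
    cart = ["body", "wheel", "wheel"]
    cart_edges = [(0, 1), (0, 2)]
    print(policy(quad, quad_edges, rng.normal(size=(5, OBS))).shape)  # (5, 2)
    print(policy(cart, cart_edges, rng.normal(size=(3, OBS))).shape)  # (3, 2)
```

Because the graph is built from the design itself, adding or removing a module only changes the list of nodes and edges, not the set of weights, which is what lets a single trained policy be applied to designs it was never trained on.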

Questions this project asks:

- What is in “scope” for the definition of a module? (e.g., structure, power, actuation, sensing, computation, controller, and interfaces)

- A small set of modules can be used to construct a large number of designs. Can one control architecture be applied to any and all of these designs at once?

- Given a task, how can one determine the best robot design to achieve that task?

- Given a range of tasks, how can one determine the set of modules whose combinations form robots that achieve those tasks? How can one optimize the design of the individual modules within that set?

Past:

- Stelian Coros