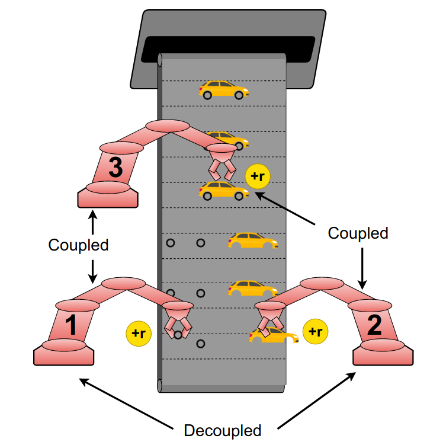

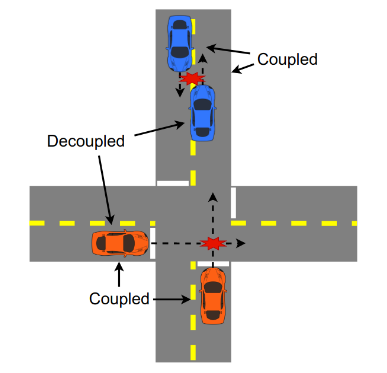

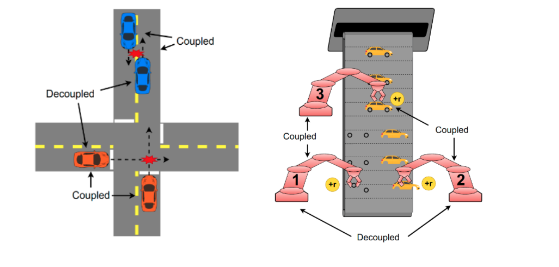

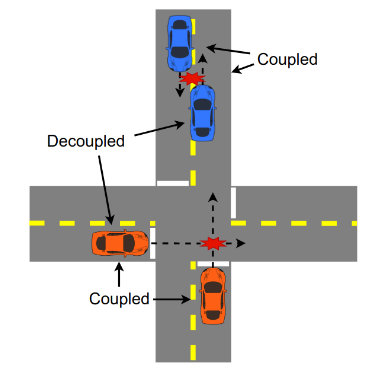

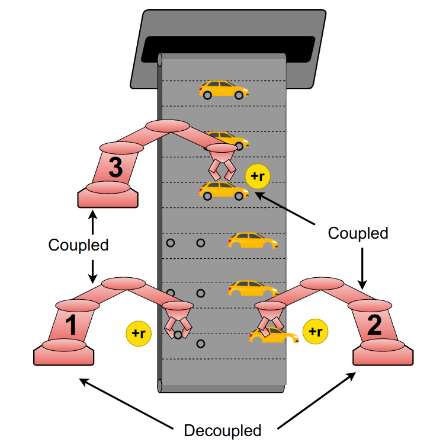

One of the preeminent obstacles to scaling multi-agent reinforcement learning (MARL) to large numbers of agents is assigning credit to individual agents' actions. This project seeks to address this credit assignment problem with an approach that we call partial reward decoupling (PRD). PRD attempts to decompose large cooperative multi-agent RL problems into decoupled subproblems involving subsets of agents, thereby simplifying credit assignment. Our initial work [1] empirically demonstrated that decomposing the RL problem with PRD, in both actor-critic and proximal policy optimization (PPO) algorithms, yields lower-variance policy gradient estimates than related approaches that do not use PRD, such as counterfactual multi-agent policy gradient (COMA), a state-of-the-art MARL algorithm. This lower variance improves data efficiency, learning stability, and asymptotic performance across a wide array of multi-agent RL tasks.
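As a rough illustration of why restricting each agent's learning signal to a subset of agents can lower variance, the sketch below assumes the team reward splits into per-agent rewards and that each agent's "relevant set" (the agents whose rewards its actions can affect) is already known. PRD itself must estimate these sets; this sketch simply takes them as given. The names `decoupled_returns`, `relevant`, and `score_function_samples` are hypothetical and not taken from the PRD code. The second function is a one-step toy problem in which only agent 0's own reward depends on its action, so summing every agent's reward adds noise to agent 0's REINFORCE-style gradient estimate without changing its expectation.

```python
import random
from statistics import pvariance, mean


def decoupled_returns(rewards, relevant, gamma=0.99):
    """Per-agent discounted returns that sum only the rewards of relevant agents.

    rewards[t][j]: reward of agent j at timestep t (assumed per-agent rewards).
    relevant[i][j]: True if agent j is in agent i's relevant set (assumed given;
        this sketch does not estimate the sets).
    Returns G[t][i]: discounted sum, from step t onward, of relevant agents' rewards.
    """
    T, n = len(rewards), len(relevant)
    G = [[0.0] * n for _ in range(T)]
    running = [0.0] * n
    for t in reversed(range(T)):
        for i in range(n):
            step = sum(rewards[t][j] for j in range(n) if relevant[i][j])
            running[i] = step + gamma * running[i]
            G[t][i] = running[i]
    return G


def score_function_samples(num_agents, num_samples, decoupled, seed=0):
    """Toy one-step gradient samples for agent 0 with a Bernoulli policy.

    Agent 0 picks action 1 with probability p = 0.5 and earns reward a.
    The other agents' rewards are independent N(0, 1) noise, i.e. irrelevant
    to agent 0. d/dtheta log pi(a) = a - p for a sigmoid-parameterized policy.
    """
    rng = random.Random(seed)
    p = 0.5
    samples = []
    for _ in range(num_samples):
        a = 1 if rng.random() < p else 0
        own_reward = float(a)
        # Draw the others' noise either way so both variants see the same actions.
        others = [rng.gauss(0.0, 1.0) for _ in range(num_agents - 1)]
        ret = own_reward if decoupled else own_reward + sum(others)
        samples.append((a - p) * ret)
    return samples


if __name__ == "__main__":
    team = score_function_samples(10, 5000, decoupled=False)
    prd_like = score_function_samples(10, 5000, decoupled=True)
    print("means:", mean(team), mean(prd_like))          # both near 0.25
    print("variances:", pvariance(team), pvariance(prd_like))
```

Both estimators target the same gradient (p(1 - p) = 0.25 here), but the team-reward version also carries the variance of nine irrelevant agents' rewards, which grows with the number of agents. The decoupled version drops those terms, which is the intuition behind the lower-variance estimates described above.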

[1] Freed, Benjamin, et al. "Learning Cooperative Multi-Agent Policies With Partial Reward Decoupling." IEEE Robotics and Automation Letters 7.2 (2021): 890-897. https://ieeexplore.ieee.org/abstract/document/9653841