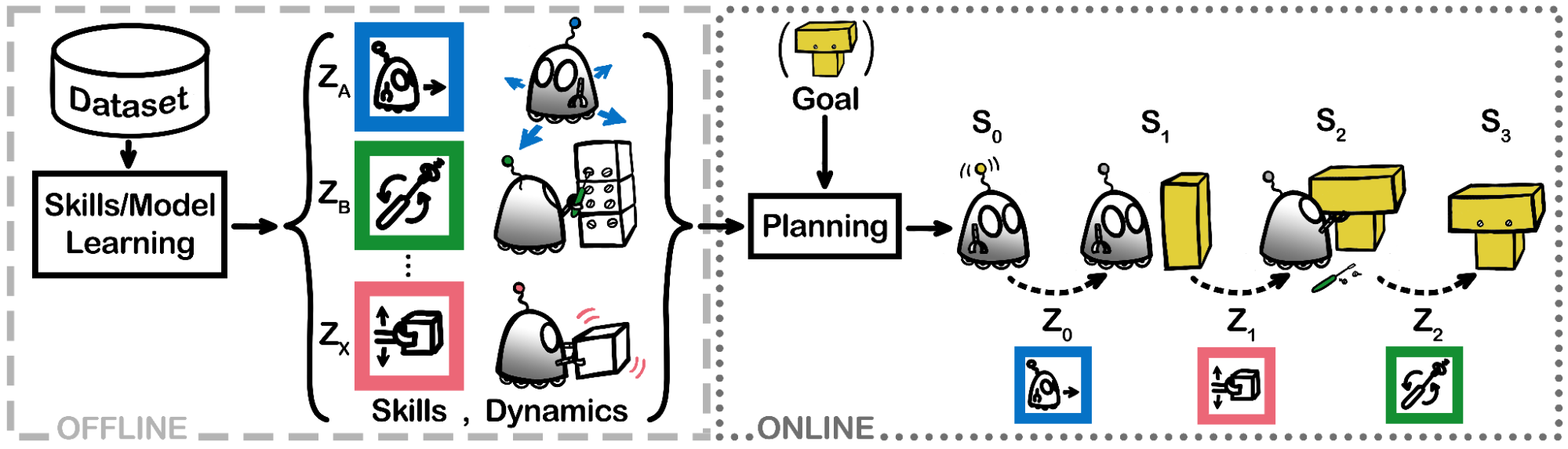

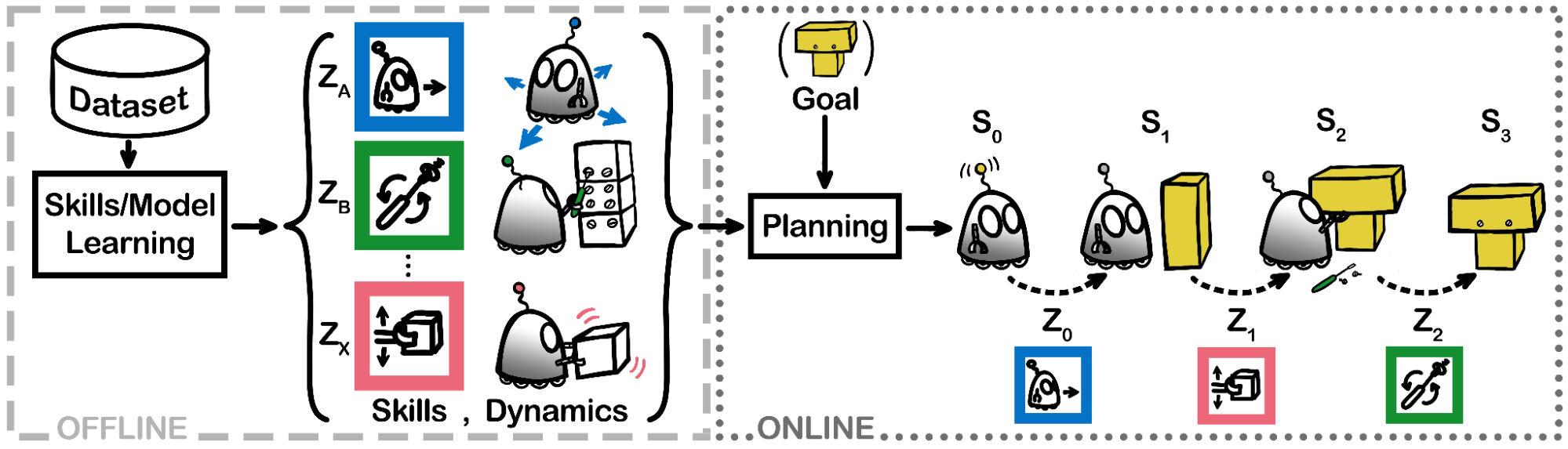

Agents that can build temporally abstract representations of their environment are better able to understand their world and plan over extended time scales, even with limited computational power and modeling capacity. However, existing methods for automatically learning temporally abstract world models usually require millions of online environment interactions and incentivize agents to reach every accessible state, which is infeasible for most real-world robots in terms of both data efficiency and hardware safety. This project seeks to automatically learn skill sets and temporally abstract, skill-conditioned world dynamics models purely from offline data, so that sequences of skills can be planned rapidly to solve new tasks. Initial experiments show that our skill-planning approach performs comparably to or better than a wide array of state-of-the-art offline RL algorithms on a number of simulated robotic locomotion and manipulation benchmarks, while offering a higher degree of robustness and adaptability to new goals.
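To make the idea of planning over skills concrete, here is a minimal sketch under loudly stated assumptions. It is not the project's method: the 2-D point environment, the four hand-written skills, the `abstract_model` function (a stand-in for a learned skill-conditioned dynamics model), and the exhaustive search are all hypothetical choices made for illustration. A practical planner would more likely sample or optimize over skill sequences instead of enumerating them.

```python
import itertools

import numpy as np

# Hypothetical skill set: each skill moves a 2-D point in one fixed direction
# for a fixed number of low-level steps.
SKILL_DIRECTIONS = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]])
STEPS_PER_SKILL = 5
STEP_SIZE = 0.2


def abstract_model(state, skill):
    """Stand-in for a learned skill-conditioned model.

    Predicts the state reached after running `skill` to completion, so one
    call covers STEPS_PER_SKILL low-level steps (one temporally abstract step).
    """
    return state + STEPS_PER_SKILL * STEP_SIZE * SKILL_DIRECTIONS[skill]


def plan_skills(start, goal, horizon=4):
    """Enumerate every skill sequence of length `horizon` and keep the one
    whose predicted final state is closest to the goal."""
    goal = np.asarray(goal, dtype=float)
    best_plan, best_cost = None, np.inf
    for plan in itertools.product(range(len(SKILL_DIRECTIONS)), repeat=horizon):
        state = np.asarray(start, dtype=float)
        for skill in plan:
            state = abstract_model(state, skill)
        cost = float(np.linalg.norm(state - goal))
        if cost < best_cost:
            best_plan, best_cost = plan, cost
    return best_plan, best_cost


plan, cost = plan_skills(start=[0.0, 0.0], goal=[2.0, 2.0])
print("skill sequence:", plan, "predicted distance to goal:", cost)
```

Because the model predicts the outcome of a whole skill, a four-skill plan here covers twenty low-level steps while the planner only evaluates four model calls per candidate, which is the computational saving that temporal abstraction is meant to provide.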

Machine learning techniques, and Reinforcement Learning (RL) in particular, have recently been widely used to make robots behave in a desired manner. Two major challenges continue to prevent the widespread deployment of RL solutions. First, these techniques provide no safety guarantees, so they are not used in safety-critical systems. Second, most of their successes have been demonstrated only in simulation, and behavior upon transfer to real-world settings is often quite poor. The aim of this project is to borrow techniques from control theory to provide safety guarantees, smooth sim-to-real transfer, accelerated learning, and robustness. Currently, we build upon Dream to Control, a state-of-the-art model-based RL algorithm that takes only images, rewards, and actions as inputs and learns both a world model in a compact latent space and the actions to take.