Stereo and LIDAR are two very different technologies for producing 3D data. The two methods are, to a certain degree, complementary, because their respective strengths and weaknesses occur in different situations. For example, LIDAR provides high-accuracy range measurements whose uncertainty increases linearly with range and remains relatively low even at long ranges. Stereo range uncertainty, on the other hand, increases quadratically with range, so stereo can be better than LIDAR at close range but is worse at long range. Stereo has difficulty estimating range for surfaces with little or no texture or with repeating texture patterns, whereas LIDAR does not. Stereo generally provides high-resolution range estimates (in the image plane), while LIDAR typically has much lower angular resolution.
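The difference in how the two range uncertainties scale can be illustrated with a small numerical sketch. It assumes the standard first-order pinhole stereo model, in which the range error is the disparity error scaled by Z²/(f·b), and a simple linear error model for LIDAR. The function names and all parameter values (focal length, baseline, disparity noise, LIDAR noise coefficients) are hypothetical and chosen only for illustration; they do not describe any particular sensor.

```python
import math


def stereo_range_sigma(z_m, focal_px, baseline_m, disparity_sigma_px):
    """First-order stereo range uncertainty: sigma_Z = Z^2 / (f * b) * sigma_d."""
    return (z_m ** 2) / (focal_px * baseline_m) * disparity_sigma_px


def lidar_range_sigma(z_m, sigma_at_zero_m, growth_per_m):
    """Assumed linear LIDAR model: sigma_Z = sigma_0 + k * Z."""
    return sigma_at_zero_m + growth_per_m * z_m


def crossover_range(focal_px, baseline_m, disparity_sigma_px,
                    sigma_at_zero_m, growth_per_m):
    """Range at which the two models predict equal uncertainty.

    Solves a*Z^2 - k*Z - sigma_0 = 0 for its positive root,
    where a = sigma_d / (f * b).
    """
    a = disparity_sigma_px / (focal_px * baseline_m)
    disc = growth_per_m ** 2 + 4.0 * a * sigma_at_zero_m
    return (growth_per_m + math.sqrt(disc)) / (2.0 * a)


# Hypothetical sensor parameters (assumptions, for illustration only).
STEREO = dict(focal_px=1000.0, baseline_m=0.5, disparity_sigma_px=0.25)
LIDAR = dict(sigma_at_zero_m=0.02, growth_per_m=0.001)

if __name__ == "__main__":
    z_cross = crossover_range(**STEREO, **LIDAR)
    print(f"crossover range: {z_cross:.2f} m")
    for z in (2.0, 10.0, 50.0):
        s = stereo_range_sigma(z, **STEREO)
        l = lidar_range_sigma(z, **LIDAR)
        print(f"Z = {z:5.1f} m  stereo sigma = {s:.4f} m  LIDAR sigma = {l:.4f} m")
```

Under these assumed parameters, stereo is the more precise sensor below the crossover range and LIDAR is the more precise sensor above it. The location of the crossover depends entirely on the chosen values.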

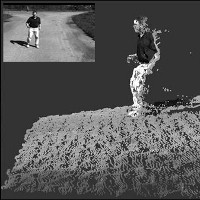

Given the complementary nature of LIDAR and stereo, it is natural to fuse the two to obtain better 3D shape estimates. Such a capability can be very useful for various tasks in autonomous vehicles, such as terrain modeling and obstacle detection. Typically, stereo and LIDAR data are fused by first computing the 3D information for each modality independently and then combining the results at the end. Our hypothesis is that stereo-LIDAR fusion can be improved by coupling the two modalities more tightly. Instead of waiting until the end to fuse the information, we look for ways to combine the two sources of information throughout the stereo process. This, in effect, allows us to achieve the high resolution of stereo while maintaining the high accuracy and general range sensing capabilities of LIDAR.